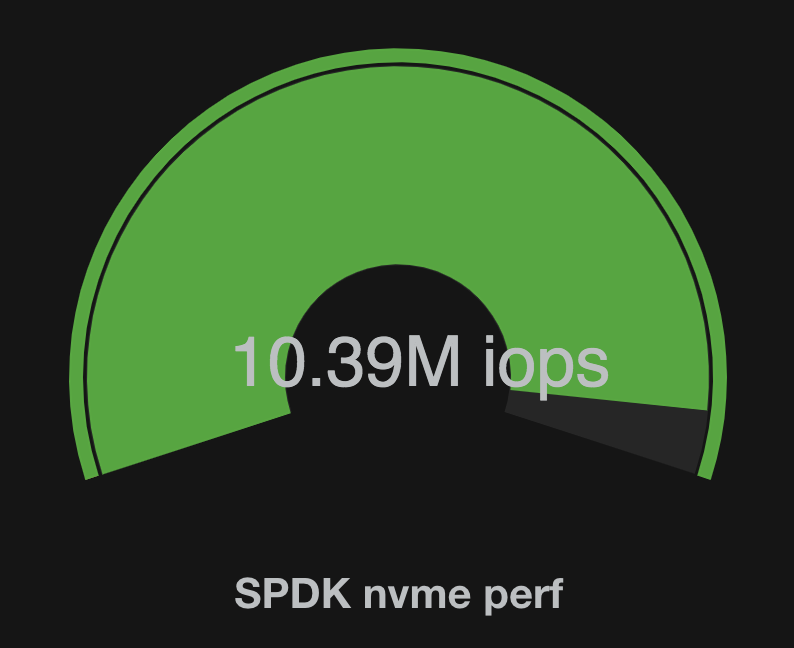

10.39M Storage I/O Per Second From One Thread

Yes, you’re reading that right. That’s 10.39 MILLION 4KiB random read I/O operations per second on a single thread. Mind blown? Don’t believe it? This blog post will explain exactly how we’ve done it.

Introduction

For those of you joining us from outside the SPDK development community, SPDK is a set of user space C libraries that re-imagine how a block storage stack should operate. It contains much of the same functionality you’d find in a traditional operating system block storage stack, from device drivers all the way up to logical volume management. SPDK was designed from the beginning to support what we call “next generation” storage media, such as Intel Optane™ devices. Designing for low latency and high throughput is what this project is all about. There is a lot of reading material over at SPDK’s main documentation page. In particular, check out the Introduction and Concepts sections.

The following will explain the techniques and strategies that SPDK employs to achieve what we feel are mind-bogglingly great performance numbers. We’re going to start the series by focusing on the lowest level first - the user space NVMe driver. We’ll show some benchmarks where SPDK is performing more than 10 million 4KiB random read I/O per second, on real hardware available today, using just a single thread. Later posts will move up the block stack, exploring the performance of SPDK when using the generic block storage API, logical volumes, and ultimately over the network with NVMe-oF or to a virtual machine using vhost.

What you’ll find here is (hopefully) deeply technical. We’re going to be discussing very low level hardware concepts - the CPU cache, PCIe, MMIO, Direct I/O, etc. - and how they drive our software designs. So grab a cup of coffee and settle in.

BIG NUMBERS

We love big numbers, so let’s start with those:

4KiB random reads at queue depth 128 to each device.

| Configuration | |

| CPU | Intel® Xeon® Platinum 8280L CPU @ 2.70GHz |

| Memory | 12x 16GB 2667MHz DDR4 |

| Storage | 21x Intel® SSD DC P4600 1.6TB |

| Platform | Intel® Server Board S2600WFT |

| BIOS | SE5C620.86B.0D.01.0250.112320180145 |

| OS | Linux Fedora29 5.0.0-rc6+ |

| Hyperthreading | ON |

| Turbo | ON |

| Turbo Frequency | 4.0GHz |

That’s 10.39 million 4KiB random read I/Ops using a single thread. While expensive enough that this probably isn’t your home gaming rig, note that it’s composed of commercial, off the shelf products. It’s a regular Intel® Xeon® server platform with a bunch of NVMe SSDs plugged into it.

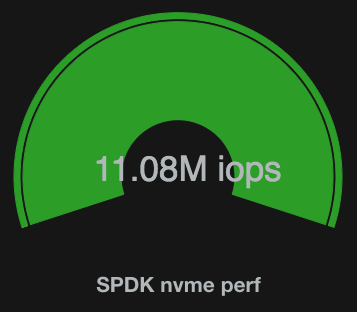

Just for kicks, we also ran 512B random reads to 20x Intel® Optane™ SSD DC P4800X drives.

512B random reads at queue depth 32 to each device.

The most amazing part of this second benchmark is that the average latency per I/O is just 57us.

| Configuration | |

| CPU | Intel® Xeon® Platinum 8280L CPU @ 2.70GHz |

| Memory | 12x 16GB 2667MHz DDR4 |

| Storage | 20x Intel® Optane™ SSD DC P4800X 375GB |

| Platform | Intel® Server Board S2600WFT |

| BIOS | SE5C620.86B.0D.01.0250.112320180145 |

| OS | Linux Fedora29 5.0.0-rc6+ |

| Hyperthreading | ON |

| Turbo | ON |

| Turbo Frequency | 4.0GHz |

So How’d We Do It?

Now for the fun part! We’re going to dive into every facet of exactly why SPDK is able to hit this number. However, if you’re not already very familiar with how I/O is submitted to an NVMe device, you may want to start here: Submitting I/O to an NVMe Device.

We set out with the wild goal of 10 million NVMe I/O per second on a single Intel® Xeon® core. The processor we used for testing was an Intel® Xeon® Platinum 8280L (Cascade Lake) which runs at 4.0GHz under turbo. This means we have only 400 core clocks (100ns) to work with for every I/O. This is, obviously, a really small budget. But we were able to hit our goal with a little room to spare.

The first order of business was determining which benchmarking tool to use.

Ultimately, we had to use the SPDK NVMe perf tool

(in examples/nvme/perf). SPDK has an fio plugin,

but fio itself simply wasn’t designed to get to

this I/Ops rate. SPDK’s NVMe perf tool has a comparatively reduced feature set,

but is designed specifically to get to this level of performance.

However, even the most optimized benchmarking tool still has its limits. For

example, a divq operation is required for every I/O as part of determining the

random LBA to read from the SSD. This operation alone consumes 6% of the CPU

cycles in our test! So that’s already cut into our 400 CPU cycles budget substantially, but

we’ve managed to hit our target number anyway.

There are four key things to think about when designing a storage stack for performance:

- No cross-thread coordination (locks, etc.).

- Poll instead of interrupt.

- Minimize MMIO.

- Get the important things into the CPU cache at the right time.

SPDK, by design, assigns NVMe queue pairs to threads. Because NVMe has multiple independent submission queues, we can get away with this. That solves problem 1 above entirely, so we won’t spend much time discussing that. As an aside, operating system drivers can’t assign queue pairs to threads because the lifetime of the operating system driver is much longer than any given thread and there may be many more threads on the system than available queue pairs. So operating systems typically assign NVMe queue pairs to CPU cores and take locks to coordinate with the threads on that CPU core. This minimizes lock contention considerably, but doesn’t eliminate it. SPDK, as a user space application, is able to directly assign queue pairs to threads and avoid the locks entirely. This results in a very minor, but measurable, performance difference.

Important Compiler Options

Digging through the results of perf and meticulously hand-optimizing assembly

is tons of fun (well, for us anyway), but it takes a lot of effort. Before doing

that, it’s important that we take advantage of all of the automatic

optimizations available in our compilers. SPDK supports both clang and gcc, but

for the purposes of this blog post we’ll focus on gcc. Specifically:

gcc (GCC) 8.3.1 20190223 (Red Hat 8.3.1-2)

Using an up to date compiler is important, especially if you plan to run SPDK on newer hardware.

Currently, SPDK uses -O2 as the optimization level. We tried -O3, but it doesn’t improve the performance in our tests and makes debugging and profiling more difficult.

SPDK has two additional performance options that can be enabled

through SPDK’s configure script:

- Link Time Optimization

- Profile Guided Optimization

LTO

Link time optimization allows the linker to run a post-link optimization pass on the code. This allows the linker to inline functions across compilation units. For the SPDK NVMe driver, there aren’t many cross-compilation unit calls to begin with, so the benefit is minimal. However, for much of the rest of the code in SPDK this can make a big difference. For example, SPDK has an environment abstraction layer for system-level operations not provided by POSIX such as allocating DMA-safe memory. This abstraction layer is pluggable at compile time, so all of the implementations of these functions are in a separate compilation unit. These functions are usually a wrapper around a call into DPDK, which means they’re a couple of lines of code each. Link time optimization will correctly eliminate these thin wrappers around the DPDK calls for us, without forcing us to resort to ugliness like putting the implementations all in a header file. SPDK really likes modular components with well defined interfaces, and link time optimization lets us do that without worrying too much about additional overhead.

Link time optimization can be enabled in SPDK by doing the following:

./configure --enable-lto

make

PGO

Profile guided optimization is a mechanism for compiling the code such that it outputs profiling data. Then, benchmarks can be run to generate that data which is in turn used to re-compile the code and make better optimization decisions. SPDK supports this using this sequence of steps:

./configure --enable-pgo-capture

make

<run benchmark>

./configure --enable-pgo-use

make

The benchmark can be anything that stresses the interesting code paths. In

practice, we see profile guided optimization dividing all of the I/O path

functions into a “hot” version and a “cold” version internally, and then trying

to streamline the hot version as much as possible. Profile guided optimization

can also be combined with link time optimization, so often the “hot” functions

will get inlined into each other and ultimately across compilation units. The

SPDK NVMe perf benchmark tool tends to end up with a very shallow call-stack on the

I/O path due to this effect.

For the benchmark results reported here, SPDK was using link time optimization but not profile guided optimization. Profile guided optimization can result in better performance, but requires the rigorous development of a benchmark suite that may not be optimal for every user. We didn’t feel it was fair to report numbers using that technique, but did want to call attention to it here since it is available and easy to use.

Don’t Interrupt Me!

Disabling interrupts is the oldest trick in the high speed I/O device toolkit and its use is spreading to storage stacks in major operating systems as well. Handling an interrupt is very expensive because it requires the CPU to stop whatever it was doing, swap out the stack, swap in the interrupt handler, execute it, swap it back out, and then resume what it was doing. That’s not a fast operation, and it’s not friendly to the CPU cache. When storage devices completed an I/O every couple of milliseconds, this was a great choice because it let the CPUs go idle while waiting. However, with an I/O completing every microsecond or less, we can’t stop to handle an interrupt any more.

Instead, SPDK completes all I/O by polling. Specifically, the user of the NVMe driver has to explicitly call spdk_nvme_qpair_process_completions() to check for completed I/Os. That doesn’t mean that SPDK applications busy wait though. The most efficient pattern is to multi-plex other work with periodically checking for I/O completions.

Tricks for minimizing MMIO

The NVMe specification is designed to significantly reduce the number of required MMIO compared to older specifications like AHCI. AHCI requires as many as 7 total MMIO operations (many of which are reads) per I/O, whereas NVMe requires an MMIO write only to ring the doorbell both on the submission and completion side. MMIO writes are posted, meaning the CPU does not stall waiting for any acknowledgement that the write made it to the PCIe device. This means that MMIO writes are much faster than MMIO reads. That said, they still have a significant cost.

Networking devices have been trail-blazing MMIO reduction techniques for many years, and NVMe and SPDK are heavily inspired by networking designs. In fact, SPDK’s name is very much intended to mirror DPDK. Historically, avoiding MMIO hasn’t been relevant to storage because there weren’t any storage devices capable of generating enough I/O per second to where the MMIO had any measurable impact. However, with SPDK and fast NVMe SSDs, that has now all changed.

When the CPU performs an MMIO write, a request is generated by the CPU that’s placed into a hardware queue to later be sent over the PCIe bus to the device. This queue can vary in size based on the specifics of the platform. Generally server platforms have a much deeper queue available. If the CPU generates too many MMIO operations and overflows that queue, the CPU will stall and wait for a slot at the end of the queue to open up.

The first strategy we implemented to minimize MMIO was done inside the function spdk_nvme_qpair_process_completions(). That function walks through the completion queue entries in an NVMe queue pair’s completion queue and checks for entries whose phase bit has flipped. For each entry found, we need to update the completion queue head doorbell. To avoid an MMIO per completion, we wait to write the doorbell until we’ve built up the whole set of outstanding completions for this function call, then ring it at the end. This is a fairly standard practice - almost every NVMe driver does this today.

However, SPDK can do much better by taking advantage of the fact that it’s polling. By setting an option on the NVMe queue pair, a user can indicate that they only want to ring doorbells inside of calls to spdk_nvme_qpair_process_completions(). Then, the user can call the various I/O submission functions, such as spdk_nvme_ns_cmd_read(), multiple times and then finally poll for completions and ring the doorbell. The I/O submission functions, in this case, are just building the command into the submission queue ring and returning. If a user submits 8 commands before polling, this reduces the number of MMIO writes on submission by a factor of 8. If the user submits 32 commands before polling, this reduces the number of MMIO writes on the submission side by a factor of 32, etc. This yields enormous performance improvements. For example, in the benchmark above we see only 2.89M I/Ops without it, versus the 10.39M we’re reporting with it.

The final and most advanced way to avoid MMIO occurs on the completion side. We have batched completion queue doorbell head writes within a single poll call from the beginning, but it turns out that we can delay that doorbell write considerably longer. In fact, we don’t actually need to tell the device that we consumed completion queue entries until the device needs some to become available, and the SPDK driver can easily calculate when that condition occurs. For example, if the completion queue ring has 1024 entries and the user submits 32 commands and they all complete, we don’t need to tell the device that we’ve processed those 32 completion entries. There are still 992 available for it to use anyway. As more commands are submitted, SPDK can simply do the math until it sees that the newly submitted commands won’t have a completion queue entry available for them, and only then ring the doorbell. However, under heavy load, such as in the 10M I/O per second benchmark we’re presenting in this post, each call to spdk_nvme_qpair_process_completions() is finding 30 to 50 completions anyway, so the more basic completion queue doorbell batching within a single function call is providing most of the value. This advanced technique doesn’t make much of a performance difference in this scenario, however we suspect it will have a bigger impact at lower queue depth when fewer I/O completions are reaped per poll call.

With all of these techniques combined, SPDK performs far fewer MMIO writes than most NVMe drivers and this is one of the largest contributors to SPDK’s high performance.

Maximizing The Impact of the CPU Cache

Small code is fast code because it fits in cache. To set the stage, the CPU has three layers of caches - L1, L2, and L3. Each level gets successively bigger but takes longer to access. L1 cache is further split into two parts, an instruction cache and a data cache.

Any time data is accessed, the CPU pulls in an entire cache line into the CPU cache. Cache lines are 64 bytes on the CPUs we’re focusing on. Since the cache is limited in size, pulling in a cache line typically requires evicting another one that we’ll probably need later. We spend a lot of time trying to eliminate cache line thrashing like this.

There are a couple of tricks in the SPDK NVMe driver we’re employing to help

here. The most basic technique is structure packing, where we rearrange the data

layout of the structs to try and get as much data into each cache line as

possible. We make extensive use of the pahole utility from the dwarves package

to look at our data structures, find extra padding inserted by the compiler, and

rearrange to make sure all of the holes are filled. Rearranging is better than

instructing the compiler to pack the structure because rearranging maintains

data alignment. The pahole tool output looks like this:

The right hand side shows the size of each data member and it’s offset into the current structure. It also highlights where the cache line boundaries are located.

However, we often get considerably more crafty than simply packing. For example, most code in a device driver is written to handle corner cases such as errors. We intentionally arrange our data structures so that all of the data touched in the normal operation path (hot path) sits on the same cache lines, whereas data only accessed during an error (cold path) is moved elsewhere. That results in fewer cache line accesses overall, which means more of the important data stays in the cache.

In addition to hot path/cold path separation, we also often separate data by whether it is accessed on the submission or completion path. An I/O submission is processed from the top down until it is submitted to the device, but that I/O is completed at some later time in response to a separate poll call. Often, we can have a small number of common cache lines accessed in both paths, and then a submission cache line and a completion cache line that are separate. This again results in less thrashing.

Remember from earlier that we only have 400 clock cycles for each I/O. We can’t

spend any time waiting on data loads with that budget. On average, SPDK touches

5 cache lines per I/O in the NVMe driver today (there’s a breakdown in the

Pre-fetching section later). For the 4KiB at 128 queue depth benchmark with 21

SSDs, that means there are approximately 13,400 cache lines being touched at any

given time (128 * 5 * 21), which is 840KiB of data. Our Intel® Xeon® Platinum

8280L CPU has a very large L2 cache, but in practice this 840KiB plus other

cache lines touched for the code itself and other bookkeeping means our working

data set will often overflow L2. It’s critically important that we arrange the

code such that the CPU can shuffle the correct cache lines into L1/L2 with

enough advanced notice to not force the CPU to stall, so that’s what we’ll cover

next.

Dependent Loads

Modern CPUs rely heavily on speculation to achieve high performance. They do this by looking ahead many instructions to start loading the correct data into the CPU cache, hoping that the data has arrived in the cache by the time the instruction needs to be executed. It’s critical that applications structure their loads in such a way that this speculation does not get stalled. Here’s some real code (simplified) that existed some time ago:

struct nvme_request {

spdk_nvme_cmd_cb cb_fn; /* Callback function */

void *cb_arg;

};

struct nvme_tracker {

struct nvme_request *req;

/* Other stuff */

uint8_t padding[...]; /* The prp entry begins on the second cache line of the tracker */

uint64_t prp[NVME_MAX_PRP_LIST_ENTRIES];

};

The NVMe completion queue is an array of completion queue entries. Inside those entries is a CID value that SPDK provided on command submission. SPDK allocates an array of tracker objects where the index is this CID. Remember, SPDK allows users to queue up more requests than there are actual slots in the submission queue, and that NVMe allows commands to complete in any order after submission. Further, the tracker objects are what contain the PRP list, which is basically an NVMe scatter gather list. So trackers are much larger than requests and must be allocated from special memory so that the device can DMA this scatter gather list directly. This necessitates this three level hierarchy of structures.

When checking for completions, SPDK would do the following:

if (cqe->phase == phase_flipped) {

struct tracker *tr = tracker_array[cqe->cid];

struct request *req = tr->req;

req->cb_fn(req->cb_arg);

}

If we imagine ourselves speculating ahead to figure out all of the loads necessary for this function, it’s something like this:

- Load cqe to read phase bit and CID.

- Load tracker - Depends on knowing CID to calculate the address of the tracker (array offset).

- Load request - Must have loaded tracker to get pointer to request.

- Call user callback function - Must have request loaded.

We can see that the CPU effectively cannot speculate ahead on the loads here - it has to do them in serial. Luckily, the tracker objects had a bit of extra space in their first cache line before the PRP list started. So we simply changed the struct definitions to the following:

struct nvme_request {

spdk_nvme_cmd_cb cb_fn; /* Callback function */

void *cb_arg;

};

struct nvme_tracker {

struct nvme_request *req;

spdk_nvme_cmd_cb cb_fn; /* Callback function */

void *cb_arg;

/* Other stuff */

uint64_t prp[NVME_MAX_PRP_LIST_ENTRIES];

};

On request submission into an actual slot, we copy the cb_fn and cb_arg into the tracker. This is cheap because we just built the request object and set up the tracker anyway, so they’re in cache at that point. Then on the completion side the CPU is able to speculate a bit better:

- Load completion queue entry to read phase bit and CID.

- Load tracker - Depends on knowing CID to calculate the address of the tracker (array offset).

- Load request - Must have loaded tracker to get pointer to request.

- Call user callback function - Must have tracker loaded.

The key is step 4, which now can happen prior to the request being loaded into memory on step 3. Often, several checks are done inside that user callback prior to actually accessing any data in the request, so it masks the latency of that request load entirely. When a stack is as highly optimized as SPDK, these seemlingly small things can have a very large impact. This change, for example, resulted in a 500k I/O per second improvement.

Pre-fetching

Pre-fetching is a technique where cache lines are loaded into the L1 cache before they need to be referenced. This avoids CPU cycles wasted due to stalling while waiting for a cache line to be loaded. In the benchmarks above, we have 2688 I/O outstanding at any given time. Each I/O has 3 data structures associated with it:

struct perf_task- This is a data structure created by the NVMe perf tool for each I/O. It has one cache line that is touched in the main I/O path.struct nvme_request- This is a transport-agnostic data structure created by the NVMe driver for each I/O. It has three cache lines that are touched in the main I/O path.struct nvme_tracker- This is a data structure created by the NVMe driver’s PCIe transport for each I/O. It has one cache line that is touched in the main I/O path.

These code paths have already been heavily reworked to touch as few cache lines as possible using the techniques described earlier, so we can’t do any better. Now let’s look at the number of cache lines available on our Intel® Xeon® Platinum 8280L processor:

- L1 cache - 32KB, or 512 cache lines

- L2 cache - 1024KB, or 16384 cache lines

- L3 cache - 39MB, or around 630K cache lines

Now back to those 2,688 I/O. We’ll touch 5 cache lines per I/O which multiplies out to 13,400 cache lines. Once we account for all of the other cache lines not associated with these I/O specific structures, and for cache associativity, it’s clear that we’ll have a fair number of L2 cache misses. These misses are rather costly - approximately 20ns for an L2 miss that hits the L3 cache. We only have 400 clock cycles to handle the I/O, which is 100ns at 4GHz. We can’t burn 20% of our budget on an L2 miss. Luckily, this is where pre-fetching can help us.

The key pre-fetching optimization we made was on the nvme_tracker objects.

Looking back at the previous scenario involving the completion path, SPDK polls

the completion queue entry for the CID and uses that as an array index to look

up the nvme_tracker object. That nvme_tracker object points to its

associated nvme_request object and the user’s callback function and context.

When we’re driving I/O to lots of devices from a single thread, it’s likely this

nvme_tracker object has been swapped out of at least L1 cache and commonly out

of L2 as well. So we’d like to pre-fetch the nvme_tracker object at some point

earlier so that it’s ready for us when we need to access it. Unfortunately,

since storage I/O can complete out of order, we don’t know which tracker to

pre-fetch until we’ve looked at the completion queue entry. We appear to be

stuck.

Or maybe not. In fact, there is a clever trick that comes to the rescue.

In practice, when we poll for completions we rarely find just one, and this is

the key. When there are multiple completions to process, we look ahead one

completion and pre-fetch its associated nvme_tracker. For example, suppose

there are three completions ready for processing:

- Get tracker for completion #1

- Pre-fetch tracker for completion #2

- Do processing for completion #1

- Get tracker for completion #2

- Pre-fetch tracker for completion #3

- Do processing for completion #2

- Get tracker for completion #3

- Do processing for completion #3

We’ve hidden the latency on loading the trackers for completion #2 and #3 by pre-fetching that cache line while the CPU is doing processing for the previous completion. This trick only requires queue depth 2 to work, so it’s very effective.

Non-Temporal Writes

For each command we first build the command into a temporary space in our request structure, then we copy it into the command submission queue slot when one becomes available. In this scheme, the CPU never ends up reading any of the memory in the submission queue at all - it only writes it and the device later does a DMA from that location. Since the CPU never reads that memory, it doesn’t benefit much from the CPU cache.

Quantifying the benefit of the CPU cache in this scenario is a bit tricky though. To perform the copy into the submission queue ring we use one of a couple different strategies, depending on available instructions. The simplest one is the following:

uint64_t *d64 = (uint64_t *)dst;

uint64_t *s64 = (const uin64_t *)src;

d64[0] = s64[0];

d64[1] = s64[1];

d64[2] = s64[2];

d64[3] = s64[3];

d64[4] = s64[4];

d64[5] = s64[5];

d64[6] = s64[6];

d64[7] = s64[7];

That’s 8 pairs of 64 bit load and mov instructions, which is the naive implementation of an assignment generated by the compiler. If the platform has SSE2, we’ll instead use those. The code is the following:

if defined(__SSE2__)

__m128i *d128 = (__m128i *)dst;

const __m128i *s128 = (const __m128i *)src;

_mm_store_si128(&d128[0], _mm_load_si128(&s128[0]));

_mm_store_si128(&d128[1], _mm_load_si128(&s128[1]));

_mm_store_si128(&d128[2], _mm_load_si128(&s128[2]));

_mm_store_si128(&d128[3], _mm_load_si128(&s128[3]));

#else

*dst = *src;

#endif

That’s 4 pairs of 128 bit operations instead of the 8 64 bit operations. Typically, fewer operations are faster (although not always!).

Most importantly, the store instruction is going to write the data into a cache line. To perform that update, it is going to pull that cache line into the L1 data cache for the CPU core executing the instruction. The load is also going to pull the source cache line in, but since we just built the command at that location it’s probably sitting in the L1 data cache already.

The data can be written into the cache more quickly than it can be written to main memory, but in the case where the cache line is not already present in the L1 data cache, reading the cache line into L1 to do a partial update negates that benefit. The device itself can also perform a DMA directly out of the CPU cache instead of from main memory, which may be marginally faster, although benchmarking shows the net performance delta for the device DMA is negligible.

Given the above, what we really want to do is perform the stores in a way that allows us to bypass the CPU cache and go directly to main memory and avoid evicting other likely useful data. Fortunately, the x86 instruction set has a set of commands for performing what’s called a “non-temporal” write, which is a write to memory with a hint to bypass the CPU cache. That’s exactly what we want! Here’s the code:

#if defined(__SSE2__)

__m128i *d128 = (__m128i *)dst;

const __m128i *s128 = (const __m128i *)src;

_mm_stream_si128(&d128[0], _mm_load_si128(&s128[0]));

_mm_stream_si128(&d128[1], _mm_load_si128(&s128[1]));

_mm_stream_si128(&d128[2], _mm_load_si128(&s128[2]));

_mm_stream_si128(&d128[3], _mm_load_si128(&s128[3]));

#else

*dst = *src;

#endif

}

These instructions can broadcast the 64 byte cache line update without allocating a cache line in the CPU’s local L1D (L1 Data) cache, which hopefully keeps other valuable data in the L1D for later. For the above benchmark, this was a 2.5% performance improvement.

Future Work

This blog post has certainly been a bit celebratory - we hit 10M I/Ops on one thread! Hooray! So what do we do now?

There are lots of opportunities to optimize components higher up the stack in SPDK, and future posts will focus on those efforts. But we do have a few remaining ideas to make the NVMe driver even faster that we just haven’t gotten around to coding yet.

One idea involves a change to the way the completion queue is polled for phase bit flips. Each completion queue entry is 16 bytes, so there are 4 of them per cache line. This memory is being read by the CPU while simultaneously being written to by the NVMe SSD over PCIe. Because each completion queue entry is a partial cache line, the NVMe SSD has to perform a read-modify-write to update the phase bit. When the SSD sends out the partial write, it sits in a queue waiting to get serialized over the PCIe bus (because there’s 10 million operations occurring per second). Upon arriving on the tail of the queue, it starts reading in the cache line immediately (pulling data from host memory to the SSD isn’t typically stalled behind any other operations during a random read test). Then, when it gets to the head of the write queue, it modifies the previously read cache line and does an atomic write back out to host memory (direct to L3 cache with DDIO on Intel platforms). Unfortunately, if SPDK polls that cache line while the write is waiting in the queue, it steals the cache line back to the CPU. When the write gets to the front of the PCIe queue, it then sees that the cache line it read was invalidated and does a read to get it again, blocking the rest of the queue until the operation is complete.

One way we can combat this is to instead do cache-line aligned reads of 4 completion queue entries at a time onto the stack. We then process those 4 entries without polling the real location for phase bit flips again, then repeat. This clumps together the time when the CPU is touching that cache line a bit, minimizing the chance of touching it when it happens to be in the PCIe queue.

Another way, of course, is changing the NVMe completion queue entry size to be a single cache line. But that’s a wider effort and might be worse than polling 4 at a time. Only benchmarking will tell.

Another hot spot in the code occurs when calling the user-provided completion

callback function upon finding an I/O completion. From the Data Dependent Loads

and Pre-fetching sections above, remember this scenario upon finding a new I/O completion:

- Load completion queue entry to read phase bit and CID.

- Load tracker - Depends on knowing CID to calculate the address of the tracker (array offset).

- Load request - Must have loaded tracker to get pointer to request.

- Call user callback function - Must have tracker loaded.

Additionally, remember that when the user submits an I/O, they provide a function pointer and optionally a pointer to an opaque context. When we call that completion callback in step 4, the user code almost always immediately dereferences that opaque context. We’re already pre-fetching the trackers one ahead of where we’re processing completions, but we’d really like to pre-fetch the user callback and context ahead of time too. It turns out, we could extend the current pre-fetching technique to span over three completions instead of two. The algorithm would look like this:

Initial Ramp Up:

- Get tracker for completion #1

- Pre-fetch tracker for completion #2

- Pre-fetch tracker for completion #3

- Do processing for completion #1

- Get tracker for completion #2

- Get tracker for completion #3

- Pre-fetch request, callback, context for completion #3

- Pre-fetch tracker for completion #4

- Do processing for completion #2

Steady State:

- Get tracker for completion #{i}

- Get tracker for completion #{i + 1}

- Pre-fetch request, callback, context for completion #{i + 1}

- Pre-fetch tracker for completion #{i + 2}

- Do processing for completion #{i}

- Repeat with i = i + 1

This requires at least three completions each time we poll to start having a benefit, but in the benchmarks we’re performing here there are typically 30 to 50 completions waiting each time.

Wrapping Up

SPDK development is a team effort, and these techniques have been developed by a large number of people over a number of years. There’s too many contributors to thank each individually, but we would like to specifically thank Vishal Verma and John Kariuki for doing all of the benchmarking and system set up as well as Greg Tucker who provided great insight into the complexities and trade-offs of various x86 CPU instructions.