120 Million I/O Per Second with a Standard 2U Intel® Xeon® System

In 2013, Intel started the Storage Performance Development Kit (SPDK) project to reimagine the storage software stack. Although NVMe was still in its infancy, organizations integrating NVMe SSDs recognized the challenges they faced as the performance and efficiency of the storage software became increasingly critical to overall system performance. When Intel kicked off the SPDK Open-Source Community in 2015, a global phenomenon was born that was laser-focused on demonstrating the outstanding performance and efficiency enabled by running software designed for NVMe devices. In the beginning, the community demonstrated that it’s possible to build systems capable of millions of IOPS (I/O operations per second) using standard hardware. However, when solid-state media based on technologies like Intel® Optane™ arrived, bringing a whole new class of NVMe SSDs with higher throughput and an order of magnitude reduction in hardware latency, the community saw an opportunity to redesign the SPDK NVMe driver. In 2019, a new SPDK NVMe driver capable of over 10 million IOPS (using a single CPU core!) was released, accompanied by a blog with deep technical insights on the techniques SPDK employs to achieve great performance. But the SPDK community is about both performance and scalability, so in 2021 we demonstrated a system that scaled to 80 million IOPS. We’re kicking off 2023 with another mind-blowing accomplishment.

We built a 2U Intel® Xeon® server system capable of 120 MILLION 512B random read I/O operations per second by combining the latest 4th Generation Intel® Xeon® Scalable Processor (code-named Sapphire Rapids) with Intel® Optane™ SSDs. The system has 2 CPUs with 80 PCIe Gen 5 lanes each, giving us plenty of PCIe lanes to connect NVMe SSDs and other I/O devices such as network cards.

| Configuration | Value |
| --- | --- |
| CPU | 2x Intel® Xeon® Platinum 8480+ Processor 2.0GHz |
| Memory | 16x 64GB 4800MHz DDR5 |
| Storage | 24x Intel® Optane™ SSD P5800X 800GB |
| Platform | QuantaGrid D54Q-2U |
| BIOS | 3A05 (ucode:0x2b000070) |
| OS | Ubuntu 22.04 LTS (Linux Kernel 5.15.0-41) |
| Hyperthreading | ON |
| Turbo | ON |
| Turbo Frequency | 3.8GHz |

BIG NUMBERS

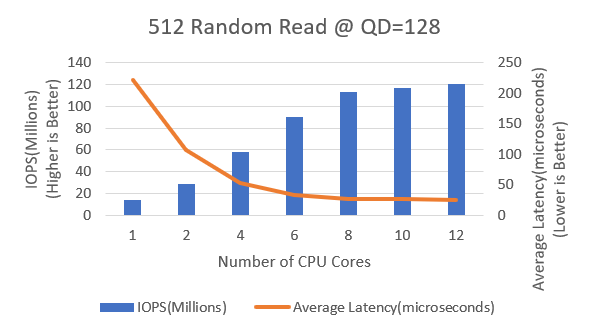

The graph above demonstrates the I/O throughput scalability of the SPDK NVMe driver with the addition of more CPU cores to perform I/O. We measured a stunning 13.91 MILLION 512B I/O per second on 1 CPU core. The IOPS scaled linearly with the addition of CPU cores up to 8 cores. Above 8 CPU cores, the scaling is non-linear as the IOPS approach the limits of the SSD capabilities. At just 12 I/O processing cores, we measured over 120 MILLION IOPS at an amazing average latency of just 25.56 microseconds.

512B random reads at queue depth 128 to each device.
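As a rough consistency check on these figures, Little's Law (throughput equals outstanding requests divided by average latency) can be applied to the numbers quoted above. The sketch below is only back-of-the-envelope arithmetic, not part of the SPDK tooling. It assumes that all 24 SSDs were active at queue depth 128 during the 12-core run, and it treats "over 120 million" as a lower bound; the function names are illustrative.

```python
# Figures quoted in the text above.
SINGLE_CORE_IOPS = 13.91e6   # 512B random read, 1 core
AVG_LATENCY_US = 25.56       # average latency at 12 cores
QUEUE_DEPTH = 128            # per device
NUM_SSDS = 24                # assumption: all 24 SSDs active in the 12-core run


def littles_law_iops(outstanding_ios, avg_latency_us):
    """Throughput = outstanding I/Os / average latency (Little's Law)."""
    return outstanding_ios / (avg_latency_us * 1e-6)


def scaling_efficiency(measured_iops, cores, single_core_iops):
    """Fraction of ideal linear scaling actually achieved."""
    return measured_iops / (cores * single_core_iops)


outstanding = NUM_SSDS * QUEUE_DEPTH  # 3072 I/Os in flight
estimated = littles_law_iops(outstanding, AVG_LATENCY_US)
print(f"outstanding I/Os: {outstanding}")
print(f"IOPS implied by latency: {estimated / 1e6:.1f} million")

# "Over 120 million" is used as a lower bound for the 12-core result.
eff_12 = scaling_efficiency(120e6, 12, SINGLE_CORE_IOPS)
print(f"12-core scaling efficiency: at least {eff_12:.0%}")
```

Under those assumptions, the latency and the IOPS figure agree with each other, and the 12-core efficiency shows how far the curve has bent away from the linear region seen up to 8 cores.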

Is Your Storage Software ready for 120M IOPS?

If you are new to SPDK, you might be asking “where do I find a storage stack capable of 120 million IOPS?” The SPDK open-source community provides a set of BSD-licensed tools and libraries for writing high-performance, scalable, user-mode storage applications. From the beginning, the community designed SPDK for low latency and high throughput “next generation” storage media, such as Intel® Optane™. There is a lot of reading material over at SPDK’s main documentation page. In particular, check out the Introduction and Concepts sections for the techniques and strategies that SPDK employs to achieve great performance.

The bedrock of SPDK is a user space, polled-mode, asynchronous, lockless NVMe driver that provides zero-copy, highly parallel access directly to an SSD from a user space application. SPDK further provides a full block stack as a user space library that performs many of the same operations as a block stack in an operating system. The community provides NVMe-oF, iSCSI, and vhost target applications built on top of the driver and block stack that are capable of serving disks over the network or to other processes. The standard Linux kernel initiators for NVMe-oF and iSCSI interoperate with these targets, as does QEMU with vhost. These servers can be up to an order of magnitude more CPU efficient than other implementations. These targets can be used as examples of how to implement a high-performance storage target, or used as the basis for production deployments.

How’d We Achieve Linear Scalability?

In 2019, the SPDK community optimized the SPDK NVMe driver to achieve over 10 million storage I/O per second from one thread. If you’re not already familiar with how SPDK was able to hit that goal, you may want to start here. This blog explains the NVMe driver IOPS scalability.

The SPDK NVMe driver does not take locks in the I/O path, so it scales linearly in terms of performance per thread as long as a queue pair and a CPU core are dedicated to each new thread. NVMe queue pairs provide parallel submission paths for I/O. Queue pairs contain no locks or atomics, however, so a given queue pair may only be used by a single thread at a time. The number of queue pairs available is dictated by the NVMe SSD itself. The NVMe specification allows for up to 65,535 independent I/O queues for submitting I/Os to an NVMe device concurrently, but most devices support between 32 and 128. Because NVMe devices have many independent submission queues, organizations developing storage applications with SPDK should consider organizing their internal data structures such that data is assigned exclusively to a single thread. All operations that require that data should be done by sending a request to the owning thread. This results in a message passing architecture, as opposed to a locking architecture, and will result in superior scaling across CPU cores.
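The ownership idea described above can be illustrated with a small sketch. This is not SPDK code or SPDK's API: `OwnerThread`, `send`, and `increment` are hypothetical names, and Python's `queue.Queue` uses a lock internally, so the example shows only the structure (one thread owns the data, everyone else sends it requests), not lockless performance. It also blocks on the reply for simplicity, whereas an SPDK application would typically continue asynchronously.

```python
import queue
import threading


class OwnerThread:
    """A thread that exclusively owns a dict; other threads send it requests."""

    def __init__(self):
        self._mailbox = queue.Queue()
        self._data = {}  # touched only by the owner thread
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def _run(self):
        # Owner loop: apply each request to the owned data, then reply.
        while True:
            msg = self._mailbox.get()
            if msg is None:  # shutdown sentinel
                break
            fn, reply = msg
            reply.put(fn(self._data))

    def send(self, fn):
        """Ask the owner to run fn(data) and wait for its result."""
        reply = queue.Queue(maxsize=1)
        self._mailbox.put((fn, reply))
        return reply.get()

    def stop(self):
        self._mailbox.put(None)
        self._thread.join()


def increment(data):
    # Runs on the owner thread, so no lock is needed around the update.
    data["hits"] = data.get("hits", 0) + 1
    return data["hits"]


def worker(owner, count):
    for _ in range(count):
        owner.send(increment)


owner = OwnerThread()
workers = [threading.Thread(target=worker, args=(owner, 1000)) for _ in range(4)]
for t in workers:
    t.start()
for t in workers:
    t.join()

total = owner.send(lambda data: data["hits"])
owner.stop()
print(total)  # 4 workers x 1000 requests each
```

Because every update runs on the owning thread, the application code never needs its own lock around `data`, which is the property the message passing design relies on.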

We pinned I/O threads to CPU cores on the same NUMA node as the NVMe SSDs because accessing local memory is faster than accessing remote memory. SPDK provides the flexibility to configure storage software to run on CPU core(s) that are on the same NUMA node as the hardware it will access. DPDK provides NUMA-aware memory allocation APIs with allocators that can maintain a per-core cache. The SPDK nvmeperf benchmarking application uses the DPDK Mempool library, which provides a per-core cache that enables threads to use per-thread local storage without taking locks.
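To make the NUMA placement concrete, here is a minimal Linux-only sketch of how a process could discover which NUMA node an SSD is attached to and restrict itself to CPUs on that node. It is not how SPDK or nvmeperf does this internally; it simply reads the standard sysfs files and calls `os.sched_setaffinity`. The helper names are illustrative, and the PCI address in the usage comment is a hypothetical placeholder.

```python
import os
from pathlib import Path


def parse_cpulist(text):
    """Parse a Linux cpulist string such as "0-3,8-11" into a set of CPU ids."""
    cpus = set()
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            lo, hi = part.split("-")
            cpus.update(range(int(lo), int(hi) + 1))
        else:
            cpus.add(int(part))
    return cpus


def numa_node_of_pci_device(bdf):
    """Return the NUMA node sysfs reports for a PCI device (-1 if unknown)."""
    return int(Path(f"/sys/bus/pci/devices/{bdf}/numa_node").read_text())


def cpus_on_node(node):
    """Return the set of CPU ids that belong to the given NUMA node."""
    path = Path(f"/sys/devices/system/node/node{node}/cpulist")
    return parse_cpulist(path.read_text())


def pin_to_device_node(bdf, max_cores=1):
    """Restrict the caller to up to max_cores CPUs local to the device."""
    node = numa_node_of_pci_device(bdf)
    if node < 0:
        raise RuntimeError(f"no NUMA node reported for {bdf}")
    chosen = set(sorted(cpus_on_node(node))[:max_cores])
    os.sched_setaffinity(0, chosen)  # 0 means the calling process
    return chosen


print(sorted(parse_cpulist("0-3,8-11")))

# Usage on a real system (hypothetical PCI address, shown for illustration):
#   pin_to_device_node("0000:5e:00.0", max_cores=2)
```

Picking the lowest-numbered CPUs is an arbitrary choice for the sketch; with hyperthreading enabled, a real deployment would also want to consider which logical CPUs share a physical core.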

You can find out more information about the design of the SPDK NVMe driver at the following documentation page, which includes the APIs you need to start integrating the driver into your storage application.

How About 4KB I/Os?

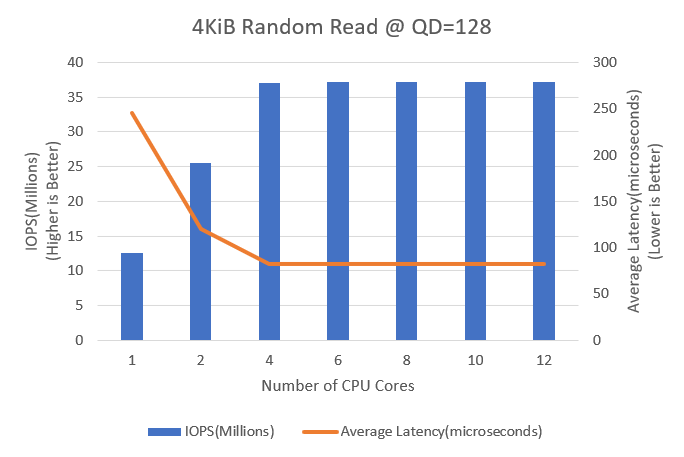

The graph above shows the scaling for 4 KiB random read I/O. We measured 12.51 million 4 KiB random read I/O operations per second on a single thread. The IOPS scaled linearly as we added a second core to 25.48 million IOPS and reached a peak of 37 million IOPS with 4 CPU cores.

4KiB random reads at queue depth 128 to each device.
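It can help to convert these IOPS figures into bandwidth. The sketch below does that arithmetic for the two headline results, assuming the load is spread evenly across all 24 SSDs (the post does not state the per-device split). One possible reading, which the post itself does not claim, is that the 4 KiB workload reaches a bandwidth ceiling after only a few cores, while the 512B workload is limited by I/O rate instead.

```python
KIB = 1024
GB = 1e9
NUM_SSDS = 24  # assumption: load spread evenly over all 24 SSDs


def bandwidth_gb_per_s(iops, io_size_bytes):
    """Aggregate read bandwidth in GB/s for a given IOPS rate and I/O size."""
    return iops * io_size_bytes / GB


# (IOPS, I/O size in bytes) taken from the figures quoted in the text.
results = {
    "512B, 12 cores": (120e6, 512),
    "4KiB, 4 cores": (37e6, 4 * KIB),
}

for label, (iops, size) in results.items():
    total = bandwidth_gb_per_s(iops, size)
    print(f"{label}: {total:.1f} GB/s total, {total / NUM_SSDS:.2f} GB/s per SSD")

# Speedup of the 4-core 4 KiB result over the single-core result.
speedup_4_cores = 37e6 / 12.51e6
print(f"4KiB speedup at 4 cores: {speedup_4_cores:.2f}x")
```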

Test Methodology

- The data was collected using the SPDK NVMe perf tool in the examples/nvme/perf directory of the SPDK repository. The perf tool was created because off-the-shelf performance tools were not designed to get to this IOPS rate. SPDK’s NVMe perf tool has a comparatively reduced feature set, but is designed specifically to get to this level of performance.

- The 1 I/O core test was performed using a CPU core on NUMA node 0. However, as we scaled the number of I/O cores to 2, 4, 6, 8, 10 and 12, we ran two nvmeperf processes concurrently. Each nvmeperf process was configured to use CPU cores on a single NUMA node to read from SSDs on that same NUMA node.

- Each workload was run for 5 minutes and repeated three times at each CPU count.

Wrapping Up

The SPDK open-source community enables collaboration amongst developers worldwide to create innovative storage software solutions for a world that increasingly relies on data. The community follows a fully open development model with all code BSD licensed, allowing community members to integrate any or all of the components under the most permissive licensing terms. A growing number of companies and projects are contributing to and adopting SPDK to build software-defined storage solutions for cloud, NAS, and SAN systems. SPDK is the core data-path framework enabling the emerging xPU-based storage solutions that are based on DPU/IPU-like technologies.

Join the SPDK community to build the next-generation storage software.

Notices & Disclaimer

Performance varies by use, configuration and other factors. Learn more at www.Intel.com/PerformanceIndex. Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. See configuration details. No product or component can be absolutely secure. Your costs and results may vary. Intel technologies may require enabled hardware, software or service activation. © Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.

Configuration Details

Test by Intel as of 10/22/2022. System: 1-node, 2x Intel® Xeon® Platinum 8480+ Processor (56 cores, HT=On, Turbo=ON), Total Memory 1024 GB (16 slots/ 64GB/ 4800 MHz), BIOS:3A05 (ucode:0x2b000070), Storage: 24x Intel® Optane™ SSD 800GB P5800X. Software: Ubuntu 22.04 LTS (Kernel 5.15.0-41-generic), GCC (Ubuntu 11.2.0-19ubuntu1) compiler, SPDK 22.05. Workload: 512B/4096B Random Read @ QD=128